At understand.ai, we develop ML-based software to automate data labeling. Our work involves building and maintaining numerous models, which require regular updates and fine-tuning to meet our customers' specific needs. Optimizing our ML operations therefore directly speeds up how quickly we can bring top-performing models to production.

What We Had Before

Our previous system relied on a Kubernetes cluster with a Kubeflow deployment to run training and data preprocessing steps on remote GPU machines. Since our operations are almost entirely Cloud-based and Kubernetes is a cornerstone of our infrastructure, this setup initially made sense. Dataset and model management relied on an in-house solution, with data stored in buckets. We had no proper model registry; instead, we relied on individual engineers’ knowledge to find the right models, which was hardly an ideal situation.

While the system worked, it was cumbersome and hard to maintain. Eventually, incompatibilities between Kubernetes and Kubeflow versions forced us to abandon the setup. Using Kubernetes for training also demanded significant engineering time for cluster maintenance, as well as deeper Kubernetes expertise within the MLOps team.

We quickly put together an interim solution based on our own tooling, but it didn’t give ML engineers a smooth development experience. It became clear that we needed to modernize our MLOps tooling, and so the search for a replacement began.

The Search for a Solution

After thorough discussions within the team and a round of requirements gathering, we shortlisted a handful of tools for evaluation. The key features we prioritized were seamless job execution on remote Cloud machines (in other words, good Cloud integration), support for running complex pipelines on remote systems, and the usual MLOps features such as experiment tracking and a model registry.

We compared tools ranging from fully featured MLOps platforms to specialized tools that had to be chained together through integrations. The benefits of a single, fully featured MLOps tool quickly stood out.

ClearML Integration with GCP via Autoscaler

ClearML integrates well with Cloud environments through its Autoscaler feature. Launching a remote machine with whatever GPU you need now takes just four lines of code. The Autoscaler and its queues require some initial configuration, but once they are set up, it is plain sailing.

To illustrate, imagine you’re an ML engineer with a training script in a repo. You want to experiment with parameters, datasets, and architectures, and you need a GPU to run the script, but your local machine doesn’t have one. With ClearML, you can push your script to a remote machine as a ClearML task with a few lines of code. Your local environment is reproduced on the remote machine, complete with the repo, the specific commit you’re working on, and even your uncommitted changes, which are logged and re-applied. ClearML's Autoscaler has made running model training in the Cloud very easy.
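For readers new to ClearML, here is a minimal sketch of that workflow. It assumes the `clearml` package is installed and configured (for example via `clearml-init`) and that an Autoscaler is serving a queue. The project, task, and queue names (`demo-project`, `train`, `gpu-queue`) and the helper names `enqueue_for_remote_run` and `train` are hypothetical placeholders, not our actual setup. `Task.init` registers the run, `Task.connect` logs the hyperparameters, and `Task.execute_remotely` enqueues the task and exits locally; on the remote worker, that same call does nothing and training continues.

```python
from typing import Any, Dict, Optional


def enqueue_for_remote_run(
    params: Dict[str, Any],
    queue_name: str,
    task_cls: Optional[type] = None,
) -> Dict[str, Any]:
    """Register this run with ClearML and hand it off to a remote queue.

    Run locally, the process exits inside execute_remotely() once the task is
    enqueued. Run by a worker pulling from the queue, execute_remotely() is a
    no-op and the (possibly UI-edited) hyperparameters are returned.
    """
    if task_cls is None:
        # Imported lazily; requires an installed and configured clearml package.
        from clearml import Task
        task_cls = Task

    # Hypothetical project/task names. Task.init records the repo, the current
    # commit, and any uncommitted diff so the worker can reproduce the code.
    task = task_cls.init(project_name="demo-project", task_name="train")
    # Log hyperparameters before enqueuing so they are visible (and editable) in the UI.
    params = task.connect(params)
    # Enqueue on the Autoscaler's queue and terminate the local process.
    task.execute_remotely(queue_name=queue_name, exit_process=True)
    return params


def train(params: Dict[str, Any]) -> str:
    # Placeholder for the real training loop (hypothetical).
    return f"training with lr={params['lr']}, batch_size={params['batch_size']}"
```

In a training script, you would call `enqueue_for_remote_run({"lr": 1e-3, "batch_size": 16}, "gpu-queue")` near the top and pass the returned dict to your training code. The optional `task_cls` argument exists only so the function can be exercised without a ClearML server; in a real script you would simply call `Task.init` directly.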

Additionally, remote machines are automatically shut down after a period of inactivity, so your manager doesn't need to worry about unnecessary costs. Using the Autoscaler means that we no longer need to maintain a dedicated Kubernetes cluster for training tasks, freeing up engineering resources and reducing complexity.
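The idle-shutdown rule is simple to state. The sketch below is a toy model of it, not ClearML's implementation: the `Worker` type, its timestamp field, and the `max_idle_s` threshold are hypothetical. In the real Autoscaler, the idle limit is a configuration setting rather than code you write.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Worker:
    name: str
    busy: bool
    last_job_finished_s: float  # hypothetical: Unix time when the worker's last job ended


def workers_to_stop(workers: List[Worker], now_s: float, max_idle_s: float) -> List[str]:
    """Return the names of idle workers whose idle time has reached max_idle_s.

    Busy workers are never stopped, regardless of when their previous job ended.
    """
    return [
        w.name
        for w in workers
        if not w.busy and now_s - w.last_job_finished_s >= max_idle_s
    ]
```

With a 15-minute threshold (`max_idle_s=900`), a worker that finished its last job 1,000 seconds ago is stopped, while one that finished 300 seconds ago, or one that is still busy, keeps running.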

Model Debugging with ClearML Session

While pushing scripts to remote machines is convenient, debugging them can be tricky. This is where ClearML Session really shines. It’s a command-line tool with several modes, and its debugging mode is well worth a look. Given an existing task ID, ClearML recreates that task’s environment on a remote machine. Instead of running the script, it starts a VSCode server, giving you a full-fledged IDE to edit and debug code as if you were working locally, except that everything runs in the Cloud. The feature is powerful yet effortless to use, and it has become a go-to tool for our team.
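As a sketch, the debugging workflow comes down to a single `clearml-session` call with the ID of the task you want to inspect. The wrapper below only assembles and runs that command. The `--debugging-session` and `--queue` flags reflect our understanding of the `clearml-session` CLI (check `clearml-session --help` for your version), and the task ID, queue name, and helper names are placeholders.

```python
import subprocess
from typing import List, Optional


def debug_session_command(task_id: str, queue: Optional[str] = None) -> List[str]:
    """Build a clearml-session command that recreates an existing task for interactive debugging."""
    cmd = ["clearml-session", "--debugging-session", task_id]
    if queue is not None:
        # Queue whose worker (e.g. an Autoscaler-provisioned GPU machine) hosts the session.
        cmd += ["--queue", queue]
    return cmd


def start_debug_session(task_id: str, queue: Optional[str] = None) -> None:
    # Runs clearml-session in the foreground; it keeps the connection open while
    # you work. check=True raises CalledProcessError if the command fails.
    subprocess.run(debug_session_command(task_id, queue), check=True)
```

Calling `start_debug_session("<task-id>", queue="gpu-queue")` is equivalent to typing the same command in a terminal.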

User-Oriented MLOps Philosophy

As I see it, the purpose of MLOps is to make it as easy as possible for engineers to run training experiments, debug, and deploy models. ML engineers should be free to focus on machine learning rather than on the infrastructure behind it. The process should be frictionless, efficient with whatever resources you care about, standardized, and scalable. Any engineer should be able to join any project and quickly understand its code structure and how to run its models, and information should be standardized and easily accessible to everyone.

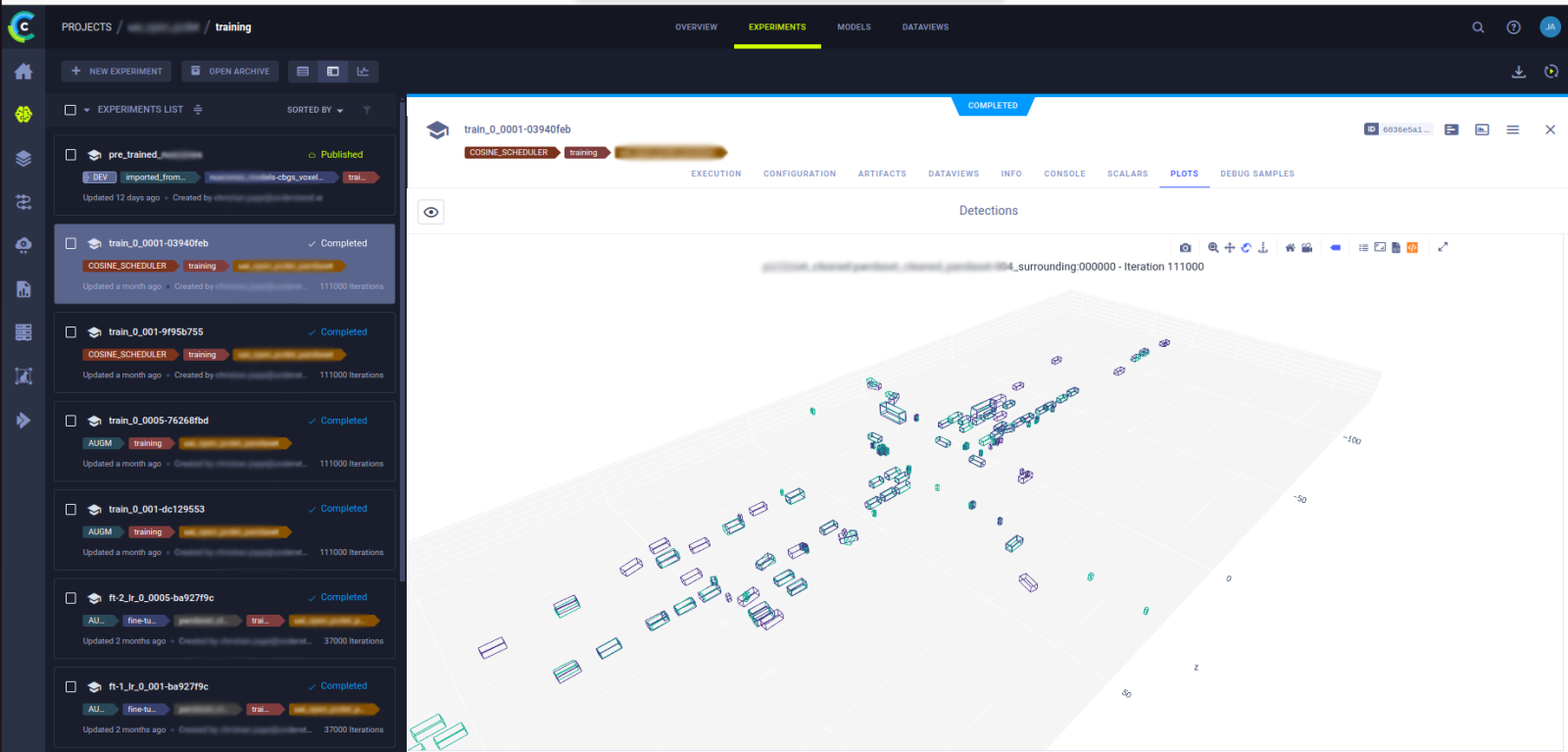

ClearML has significantly helped our team reduce friction in developing and deploying models and has standardized our processes along the way.