Ambitions for autonomous driving remain high and are slowly but steadily turning into reality, reshaping the future of mobility. While companies such as Waymo, Motional, and Tesla advance robotaxi services, autonomous trucking players like Torc, Plus.ai, and the Traton Group are pushing the boundaries of self-driving freight transport. However, this progress comes with its fair share of challenges: the industry faces immense pressure from volatile markets, geopolitical uncertainties, growing competition, and shrinking profit margins. These factors strain R&D budgets and inevitably slow the pace of innovation. In this climate, it’s essential for companies to emphasize efficiency and generate real value from every investment.

At understand.ai, we recognize these pressures and respond by investing strategically in new technologies, standardizing processes, and creating synergies with our customers. This approach enables us to maximize value and accelerate progress even under difficult market conditions. Here is how we have tackled these challenges.

Leveraging Automation to Tackle Massive Data Annotation

One key area where efficiency matters is data annotation, which plays a vital role in developing autonomous vehicles. Manual labeling at the scale required is simply impractical. For example, labeling 50,000 kilometers of driving data (recorded at 10 frames per second, with an average of 19 objects per frame) results in 36 million frames and over 680 million annotations. Manually processing this volume of data would demand roughly 3,400 person-years of work.
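The figures above can be reproduced with a few lines of arithmetic. The sketch below is illustrative only: the average speed of 50 km/h is not stated in the text but is the value implied by 36 million frames at 10 frames per second, and the function name `annotation_volume` is our own.

```python
def annotation_volume(distance_km, avg_speed_kmh, fps, objects_per_frame):
    """Return (frames, annotations) for a recording campaign."""
    hours = distance_km / avg_speed_kmh           # total recording time
    frames = round(hours * 3600 * fps)            # seconds of data times frame rate
    annotations = frames * objects_per_frame      # one annotation per object per frame
    return frames, annotations


# Assumption: 50 km/h average speed (implied by the frame count, not stated).
frames, annotations = annotation_volume(
    distance_km=50_000, avg_speed_kmh=50, fps=10, objects_per_frame=19
)
print(f"{frames:,} frames, {annotations:,} annotations")
```

With these inputs the result is 36,000,000 frames and 684,000,000 annotations, which matches the "over 680 million" quoted above.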

To address this, pre-labeling technologies have emerged, reducing the manual effort to approximately 1,300 person-years. Our AI-driven automated approach goes further, cutting the required human effort to just 330 person-years. Within this system, human intervention focuses primarily on quality assurance, averaging about five seconds per annotation.
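To put the three effort figures side by side, the relative savings can be computed directly. This is a small sketch using only the person-year numbers quoted above; the constant and function names are illustrative.

```python
MANUAL_PY = 3_400      # fully manual labeling, person-years
PRELABELED_PY = 1_300  # with pre-labeling
AUTOMATED_PY = 330     # with AI-driven automation plus human QA


def reduction(baseline, reduced):
    """Fraction of effort saved relative to the baseline."""
    return 1 - reduced / baseline


prelabel_saving = reduction(MANUAL_PY, PRELABELED_PY)
automated_saving = reduction(MANUAL_PY, AUTOMATED_PY)
print(f"pre-labeling: {prelabel_saving:.1%}, automated: {automated_saving:.1%}")
```

Pre-labeling saves roughly 62% of the manual effort, while the automated approach saves roughly 90%.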

Depending on project scale and quality requirements, this level of automation can reduce program costs by up to 90% compared to traditional manual labeling methods, enabling faster, more cost-effective development of autonomous systems.

Overcoming 3D LiDAR Annotation Challenges

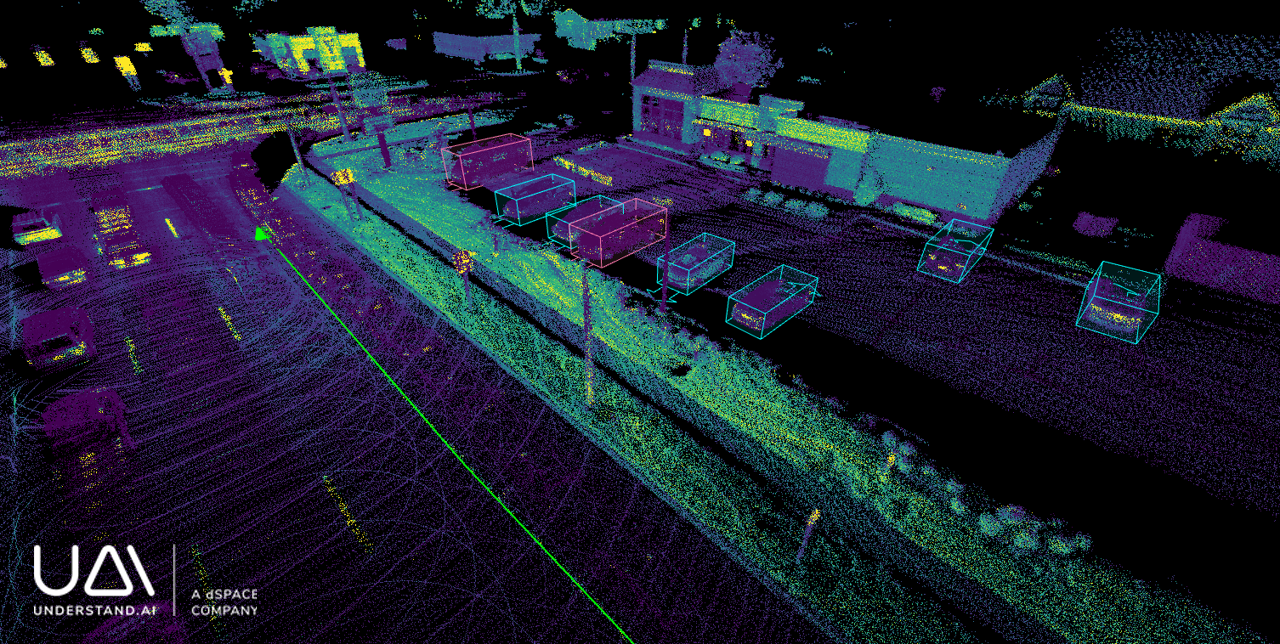

Another complex and demanding undertaking is the processing of 3D LiDAR point clouds. Unlike camera images, LiDAR data is sparser and less intuitive to interpret, making it difficult even for trained annotators to accurately detect distant vehicles, pedestrians, or two-wheelers. This complexity requires tailored strategies that combine cutting-edge technology with human expertise to maintain the high-quality ground truth essential for autonomous driving development.

Dynamic Object Annotation Through Interpolation

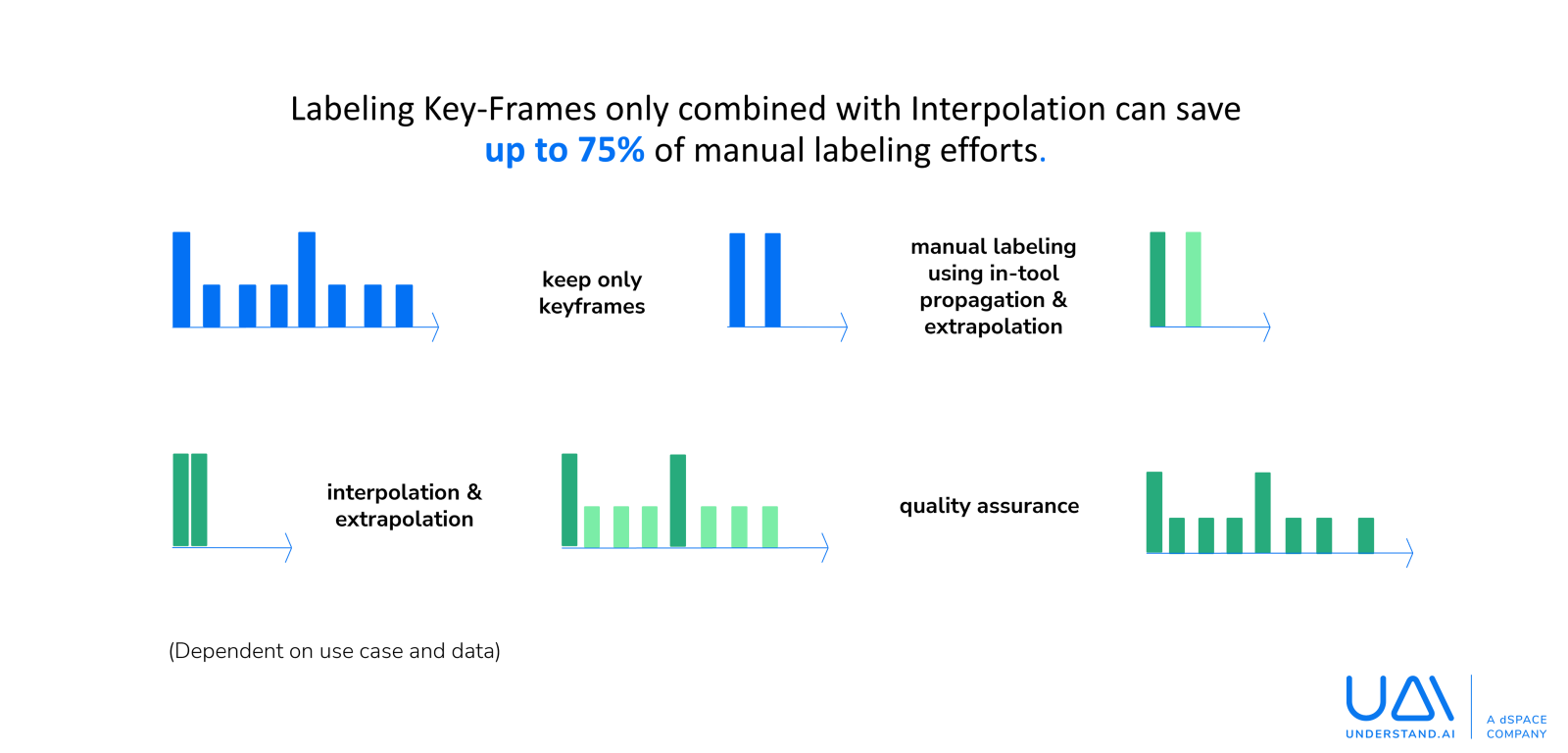

To label dynamic objects more efficiently, we leverage advanced interpolation techniques. Instead of manually annotating every frame, we begin by labeling key frames, typically every fourth frame, with in-tool propagation and extrapolation assisting annotators.

These key annotations are then interpolated across the intervening frames, followed by a human-in-the-loop quality check to ensure accuracy. This method can reduce manual labeling effort in 3D point clouds by up to 75%, dramatically speeding up the annotation process without sacrificing quality.
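As an illustration of the idea, the sketch below linearly interpolates 3D box centers and heading between key frames. It is a minimal example under simplifying assumptions, not our production method: the `Box3D` type and function names are hypothetical, box dimensions are omitted, and real tools may use motion models rather than plain linear interpolation.

```python
import math
from dataclasses import dataclass


@dataclass
class Box3D:
    x: float
    y: float
    z: float
    yaw: float  # heading in radians


def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def lerp_angle(a, b, t):
    """Interpolate angles along the shortest arc (handles the +/-pi wrap)."""
    diff = math.atan2(math.sin(b - a), math.cos(b - a))
    return a + diff * t


def interpolate_boxes(keyframes):
    """Fill every frame between consecutive key frames with interpolated boxes."""
    result = dict(keyframes)
    indices = sorted(keyframes)
    for start, end in zip(indices, indices[1:]):
        a, b = keyframes[start], keyframes[end]
        for frame in range(start + 1, end):
            t = (frame - start) / (end - start)
            result[frame] = Box3D(
                lerp(a.x, b.x, t),
                lerp(a.y, b.y, t),
                lerp(a.z, b.z, t),
                lerp_angle(a.yaw, b.yaw, t),
            )
    return result


# Key frames labeled by hand at every fourth frame (0 and 4).
tracks = interpolate_boxes({0: Box3D(0.0, 0.0, 0.0, 0.0), 4: Box3D(8.0, 2.0, 0.0, 0.4)})
```

Here frames 1 to 3 are generated automatically, so over a long sequence an annotator labels only one frame in four and reviews the rest.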

Static Object Annotation via Point Cloud Stitching

For static objects, our approach centers on point cloud stitching. By aggregating multiple 3D point clouds into a dense and optimized scene, we can annotate static elements just once and then propagate those labels frame by frame as needed. Given precise, low-noise ego-vehicle trajectories and highly accurate LiDAR calibration, this technique can cut manual labeling effort by up to 90% in complex urban environments.
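The principle can be sketched in a few lines. The example below is a simplified illustration, not our actual pipeline: it assumes planar ego poses `(x, y, yaw)` instead of full 6-DoF poses, ignores LiDAR-to-vehicle calibration, and uses hypothetical function names.

```python
import math


def to_world(point, pose):
    """Transform a point from the vehicle frame into the world frame."""
    px, py, pz = point
    ex, ey, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (ex + c * px - s * py, ey + s * px + c * py, pz)


def to_vehicle(point, pose):
    """Inverse transform: world frame back into the vehicle frame."""
    wx, wy, wz = point
    ex, ey, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    dx, dy = wx - ex, wy - ey
    return (c * dx + s * dy, -s * dx + c * dy, wz)


def stitch(scans, poses):
    """Aggregate per-frame scans into one point cloud in world coordinates."""
    return [to_world(p, pose) for scan, pose in zip(scans, poses) for p in scan]


def propagate_label(world_point, poses):
    """Project a label annotated once in the stitched scene into every frame."""
    return [to_vehicle(world_point, pose) for pose in poses]


# Three ego poses along a trajectory; each scan sees the same static pole.
poses = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (20.0, 0.0, math.pi / 2)]
scans = [[(25.0, 3.0, 1.0)], [(15.0, 3.0, 1.0)], [(3.0, -5.0, 1.0)]]

stitched = stitch(scans, poses)                # all three points coincide in world space
pole_world = (25.0, 3.0, 1.0)                  # annotated once in the stitched scene
per_frame = propagate_label(pole_world, poses) # label position in each vehicle frame
```

Because every observation of the pole lands on the same world coordinate, a single annotation in the stitched scene yields a label for each frame. The sketch also shows why trajectory and calibration accuracy matter: any pose error shifts the stitched points and the propagated labels alike.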

Process Optimization and Strategic Collaboration

Beyond technological innovations, we continuously refine project workflows to enhance efficiency. Our loop process ensures iterative alignment with customers to clarify requirements, edge cases, and data quality issues early on, before scaling to mass production. This disciplined approach avoids costly rework: skipping these steps has been shown to increase project costs by 23% due to unplanned changes and workflow disruptions. Furthermore, we apply lean production principles by standardizing workflows for common use cases and pre-training labeling teams, which improves quality and significantly reduces costs.

Looking ahead, deeper collaboration between OEMs, Tier 1 suppliers, and annotation providers holds great promise for further efficiencies. By utilizing pre-labeled 3D data directly from perception stacks, we can focus our efforts on correcting false negatives, false positives, and geometric errors, potentially reducing program costs by over 60% altogether.

Our flexible engagement models, encompassing full end-to-end services as well as hybrid approaches that combine internal and external resources, offer scalability tailored to project needs. Additionally, multi-cloud deployment strategies enable cost optimization by reducing expensive data egress fees, saving thousands of euros on large datasets.

Leveraging the dSPACE Ecosystem for End-to-End Efficiency

Finally, as part of the dSPACE family, understand.ai is integrated with the dSPACE data-driven development toolchain. This includes Autera data logging, IVS data management, UAI labeling, and AI training tools such as Aurelion, Data Replay, and Simphera. Together, these tools help streamline workflows and support autonomous driving development, while further improving cost efficiency.

Our Commitment Moving Forward

In today’s challenging market environment, success in autonomous driving depends on embracing automation and interpolation technologies, optimizing processes through lean workflows, fostering closer industry collaboration, and leveraging integrated toolchains. At understand.ai, we remain dedicated to driving innovation while maximizing value and return on investment for our partners on the autonomous journey.