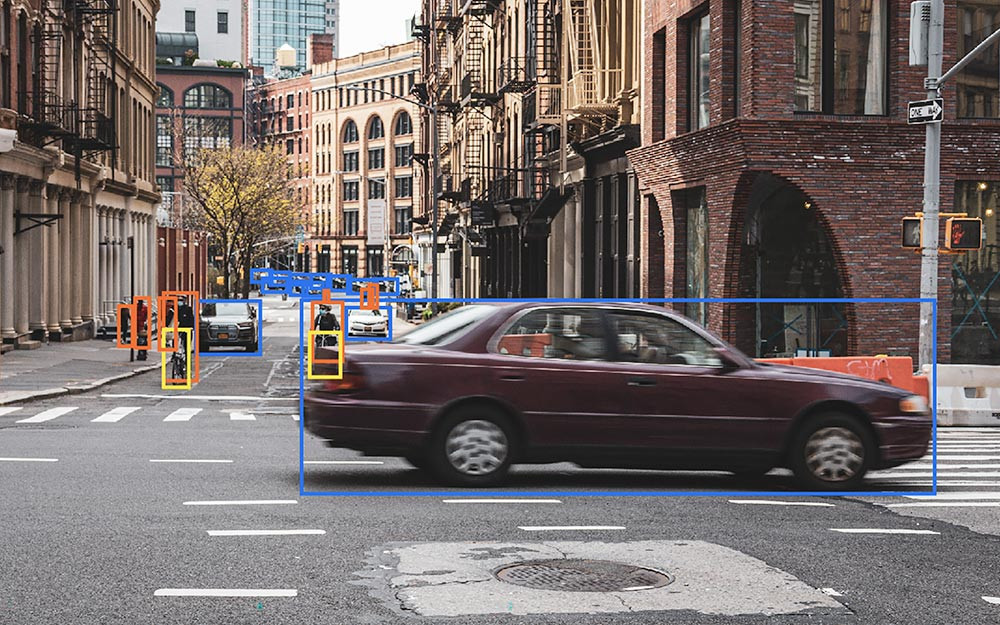

Request your free 2D annotation trial today!

Are you interested in finding out how our Ground Truth solutions can elevate your project? We invite you to try us out with a free 2D annotation trial. Simply follow the button to submit your request, and our team will handle the next steps.

Submit your request!