Get the documentation for our API!

Access the official documentation for our API, completely free!

Get access!

At understand.ai, we provide cutting-edge ground truth annotation technology that enables you to handle complexity at scale.

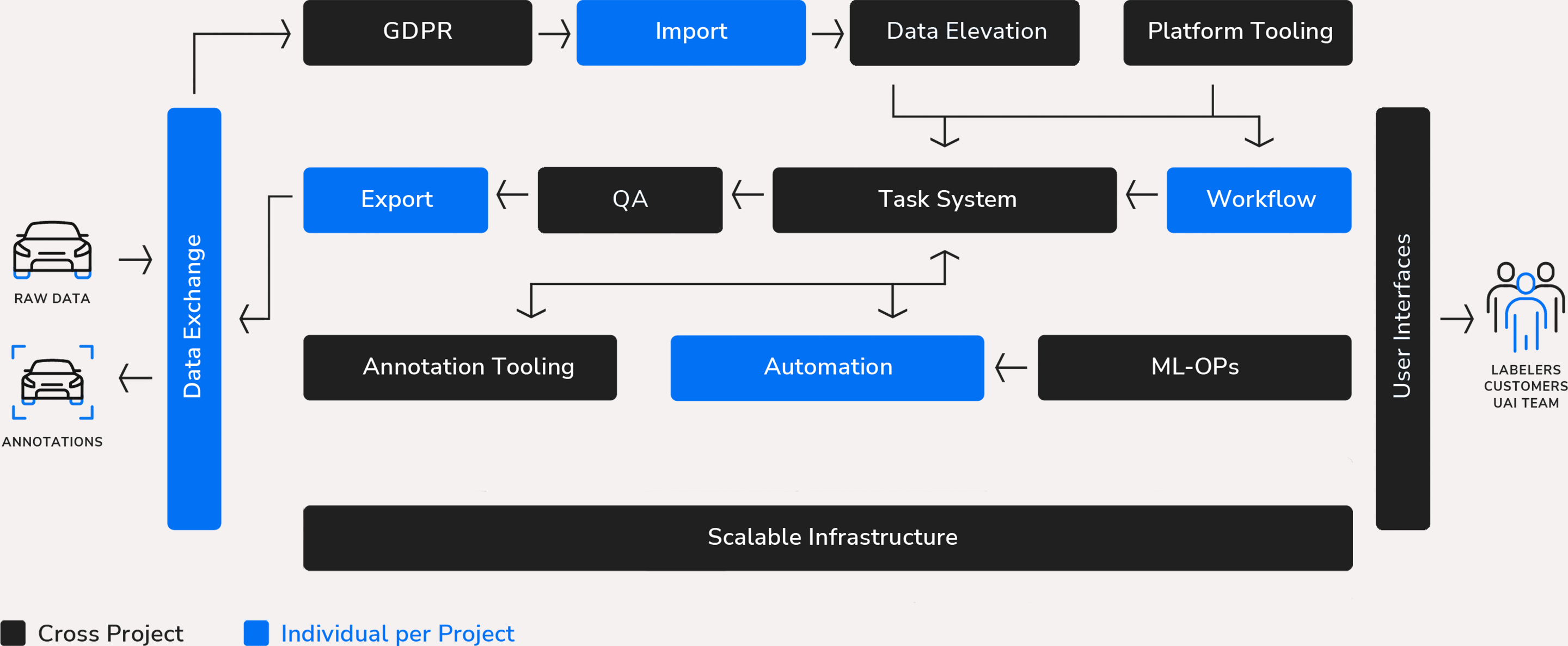

Introducing our state-of-the-art annotation platform, designed to realize complex ground truth annotation projects. Built on scalable infrastructure, it handles high data volumes and projects of any size. Our platform excels in customized data elevation and workflows, tailored to meet specific project needs. We prioritize compliance and adhere to stringent data privacy and security standards.

The seamless integration of user-friendly tools enables streamlined collaboration between customers and labeling partners. Our automation capabilities significantly reduce manual annotation efforts, making large-scale ADAS/AD programs commercially feasible.

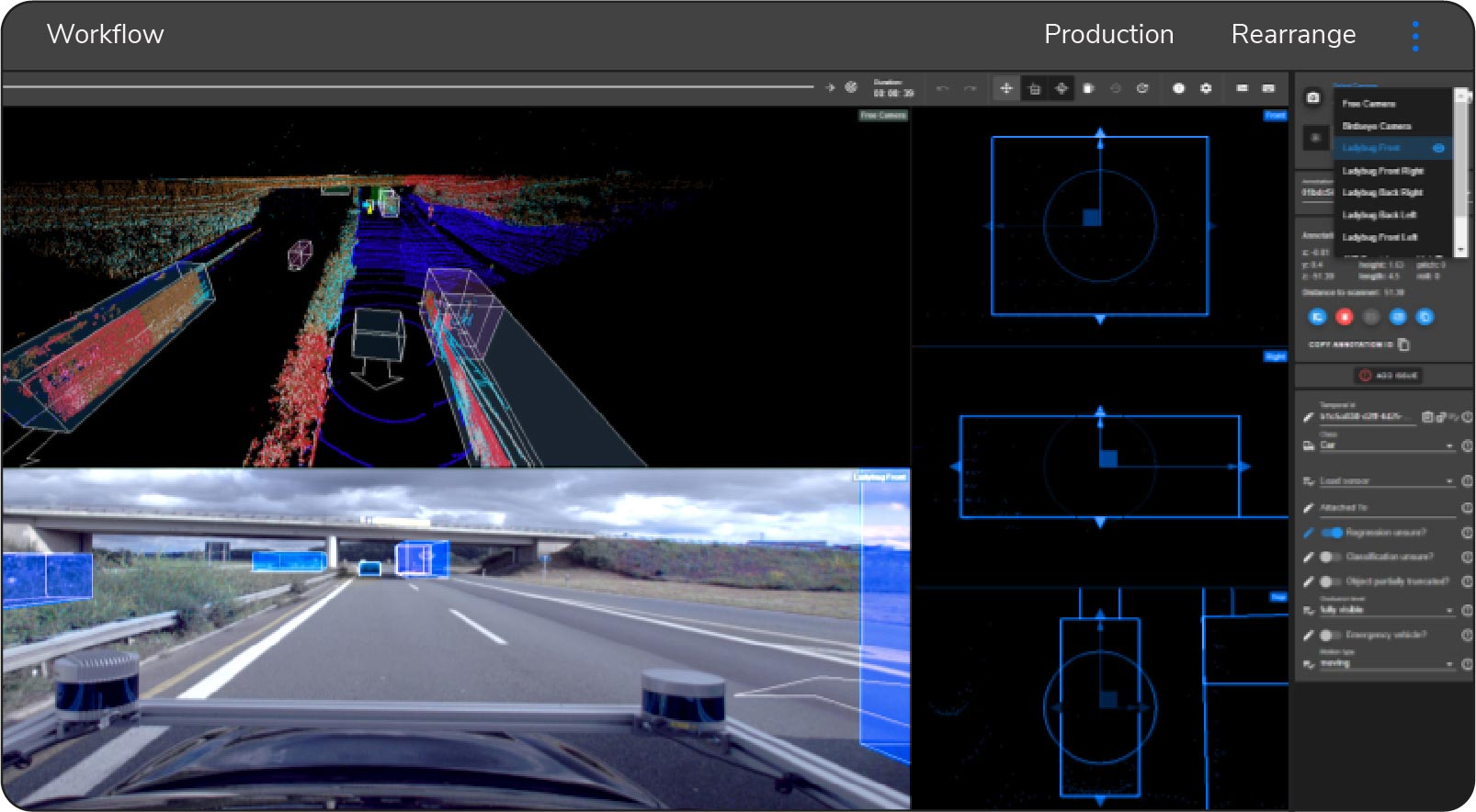

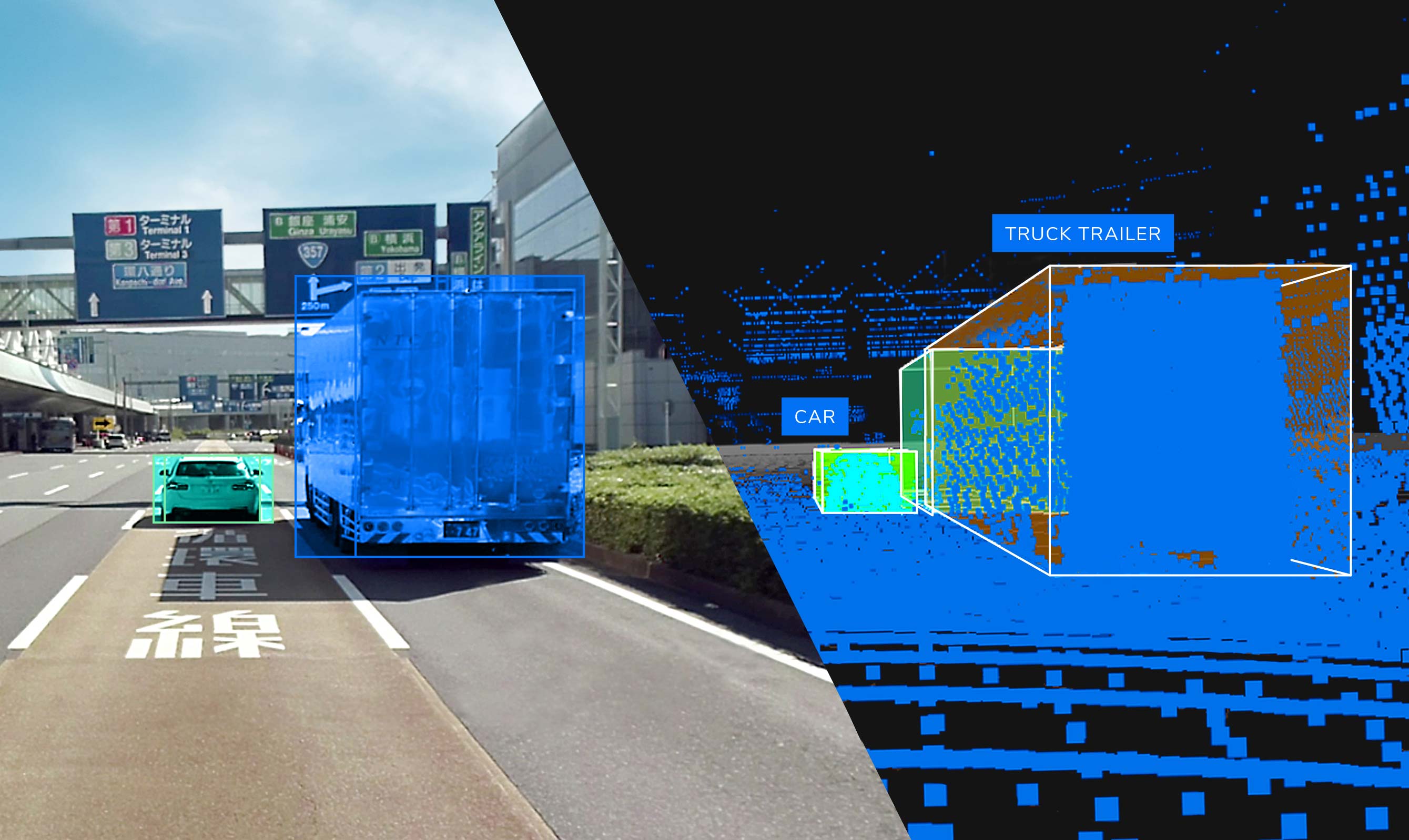

Our ground truth platform excels in multi-sensor integration, seamlessly incorporating and processing data from multiple lidar sensors. This provides a comprehensive view of complex 3D environments and enables precise annotation.

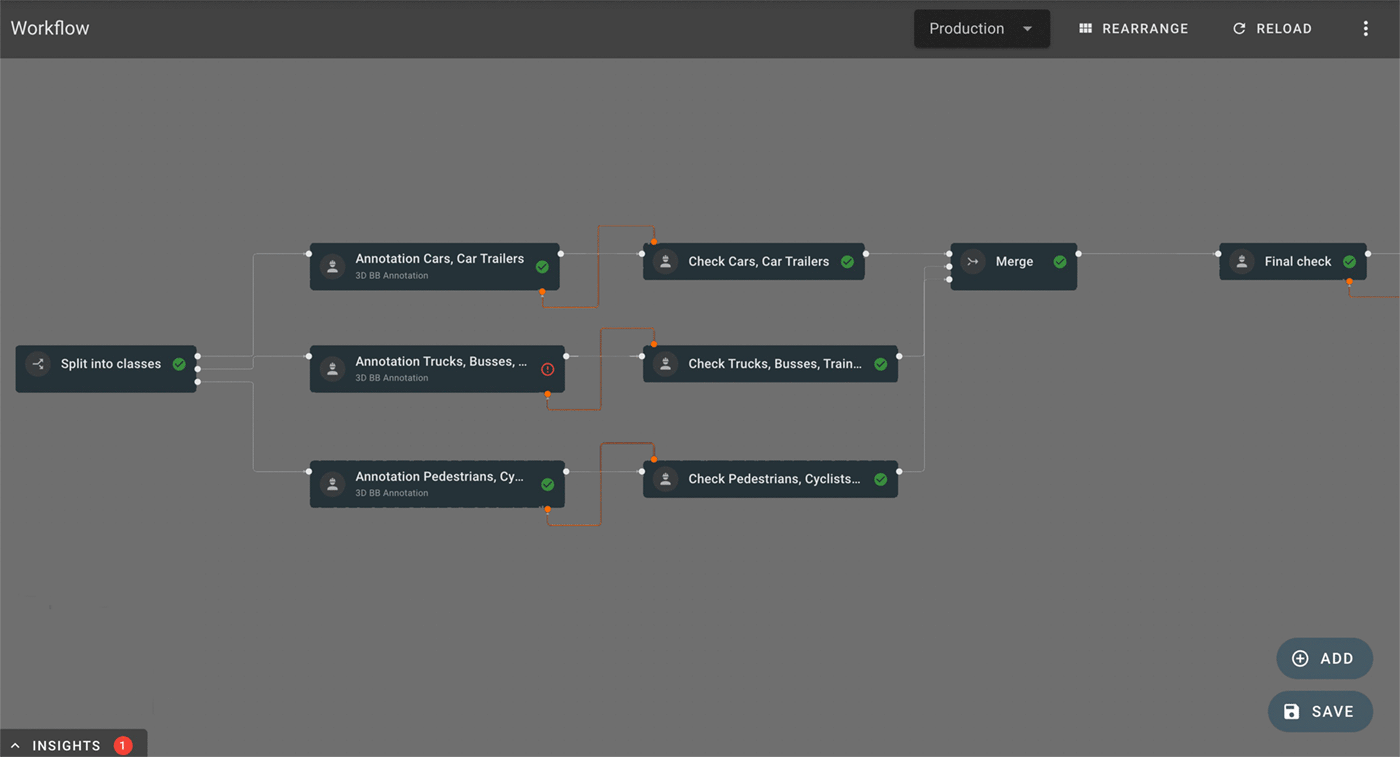

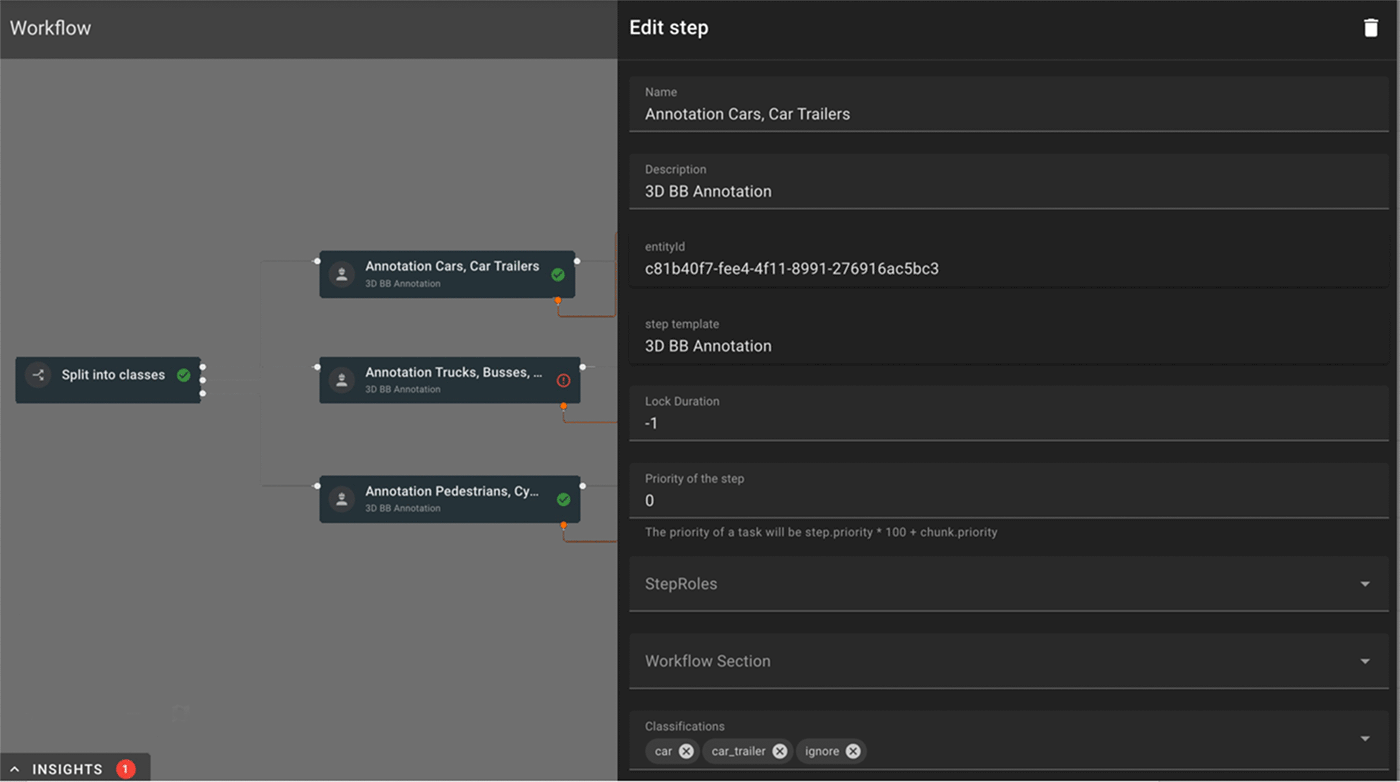

The Workflow Editor excels in user-friendliness and customization, providing an intuitive interface for creating and modifying annotation workflows. It streamlines tasks, improves efficiency, and offers features such as intuitive navigation, task dependencies, and customizable instructions.

Within our Workflow Editor, each step of the annotation process can be extensively fine-tuned and customized, so individual workflow steps can be flexibly adapted and configured to match project requirements.

Our entire system is designed to scale rapidly on multiple cloud platforms to meet volume demands in the most cost-efficient way.


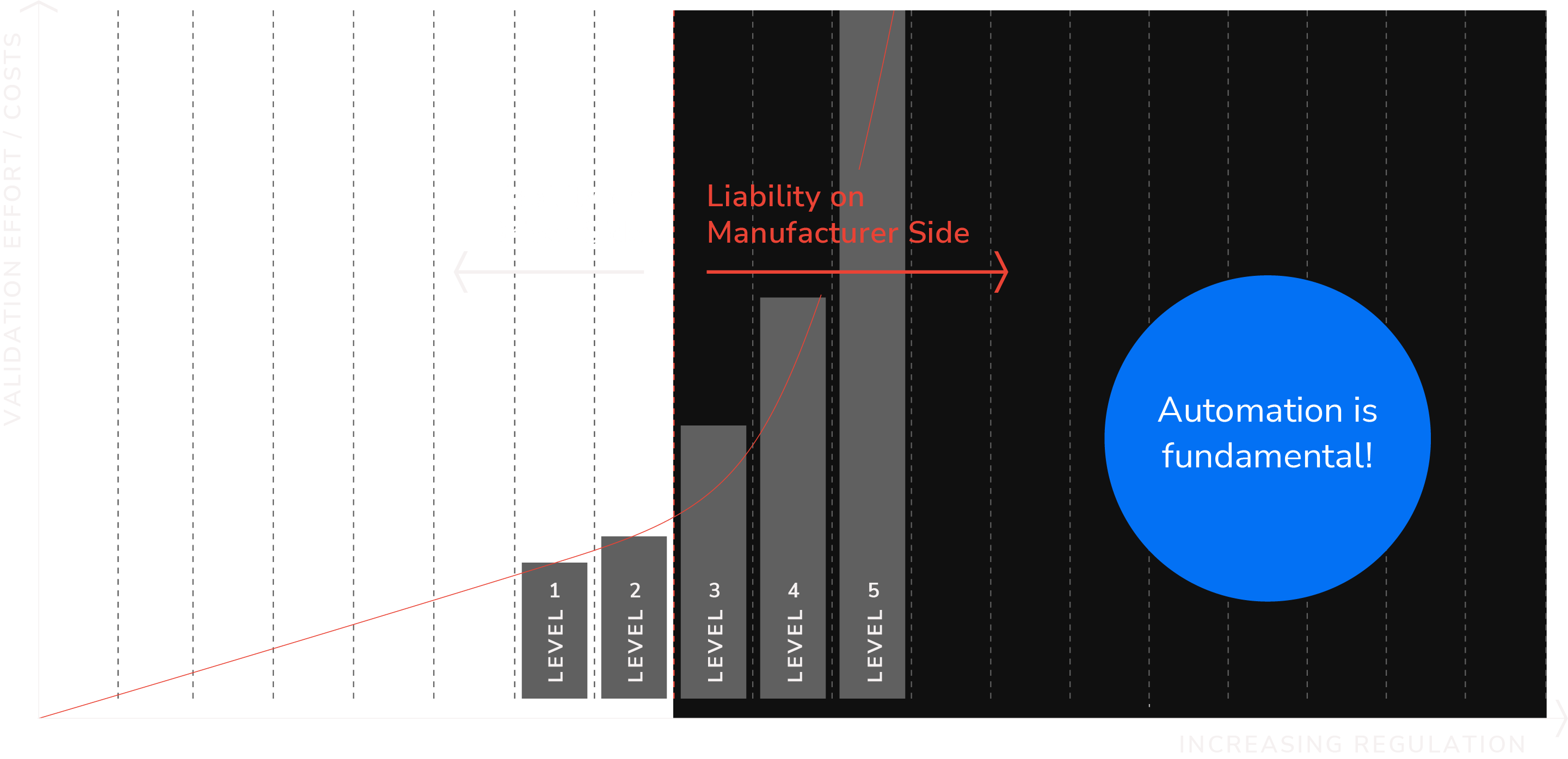

As the industry progresses to higher levels of autonomous driving, responsibility shifts from the driver to the manufacturer. Companies developing ADAS/AD systems must therefore increase their validation efforts to ensure system quality and reliability. To sustain large-scale validation projects, extensive labeling automation becomes essential.

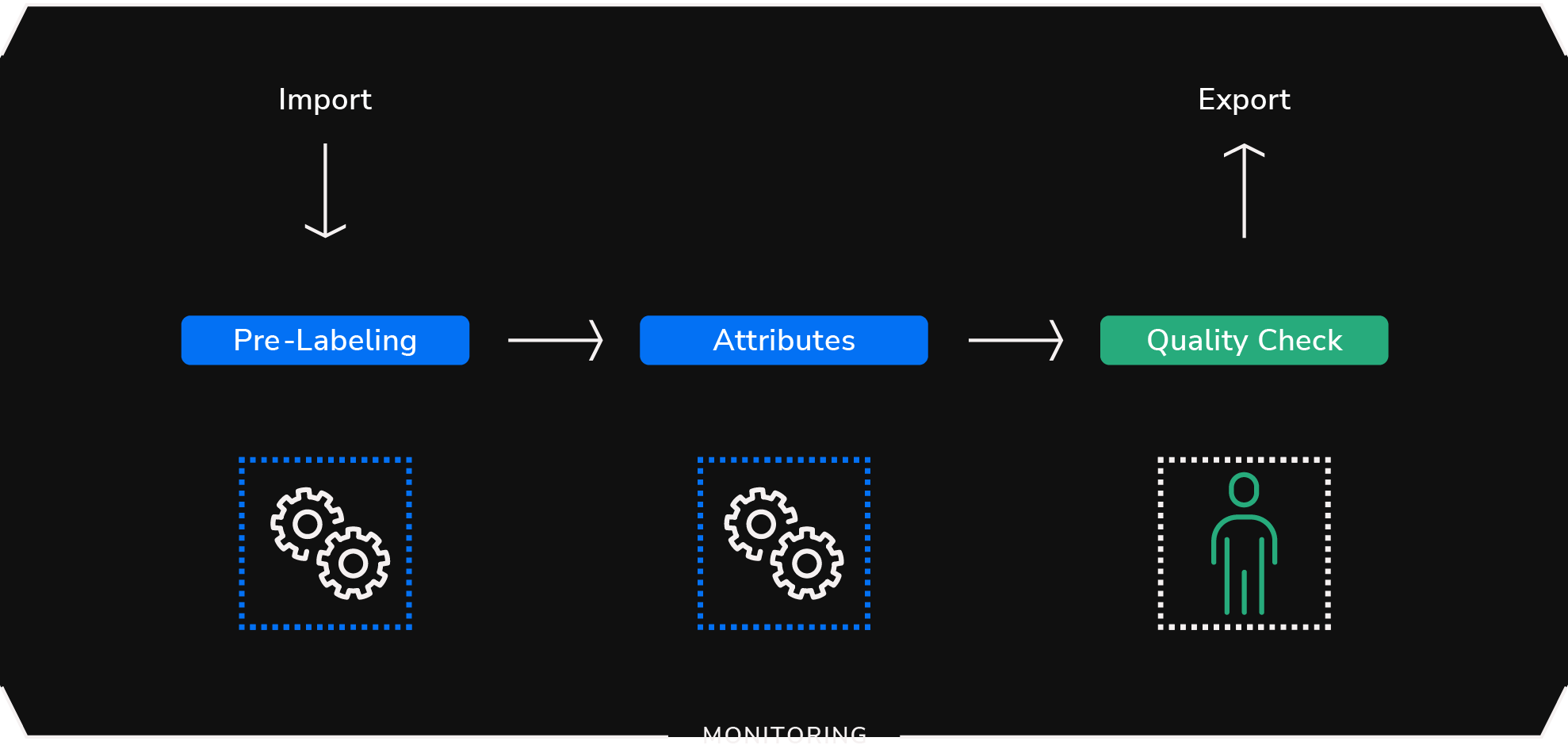

We address this challenge through the strategic use of labeling automation. Our approach, which encompasses initial pre-labeling, attribute definition, and rigorous quality checks, markedly reduces the need for manual annotation and enables us to precisely annotate large datasets across a wide range of use cases.

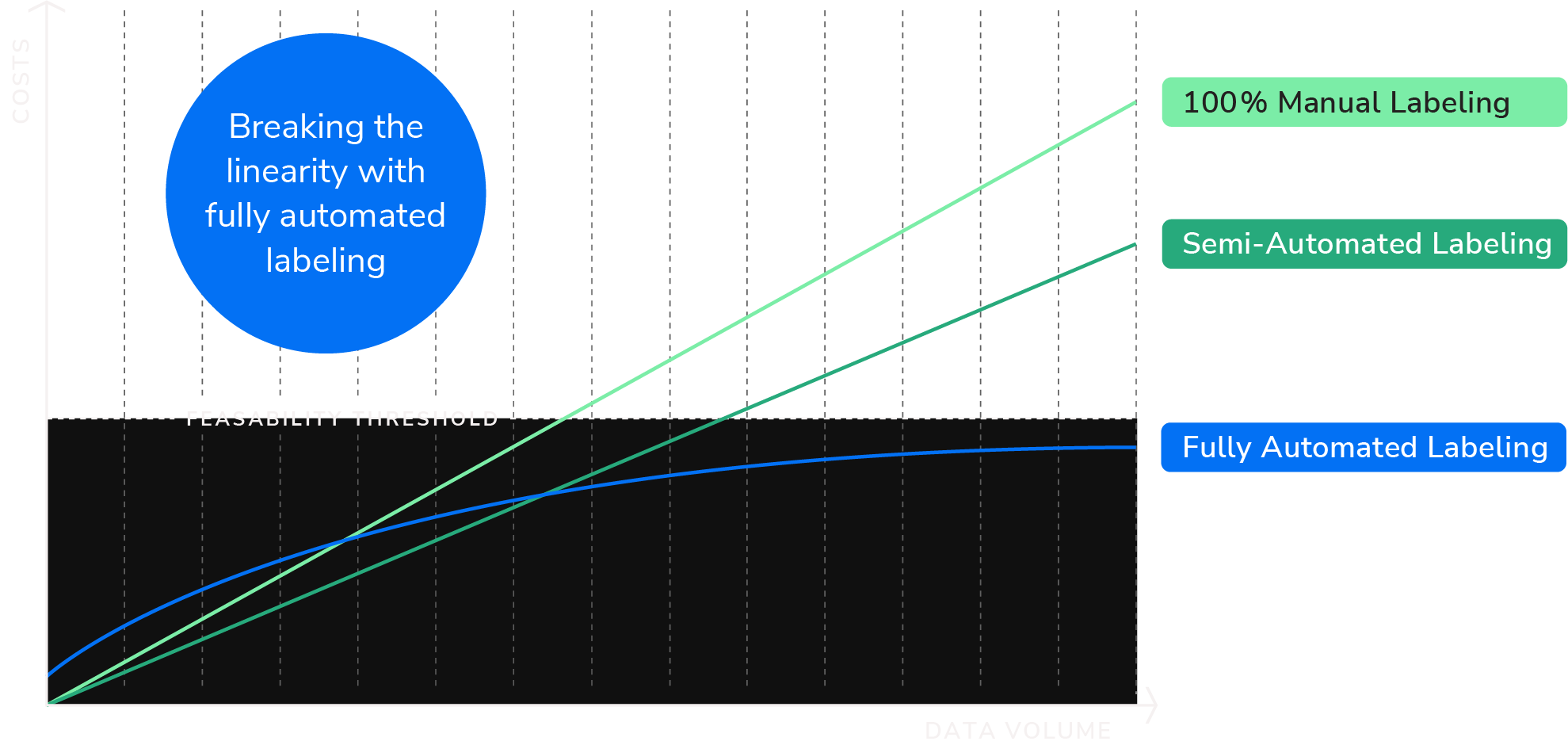

By implementing labeling automation, we decouple labeling cost from data volume, which would otherwise grow linearly with manual labeling work. This enables us to deliver affordable, consistently high-quality annotations at scale.

Our automation capabilities span a wide range of 2D and 3D tasks to meet all the requirements of your annotation project.

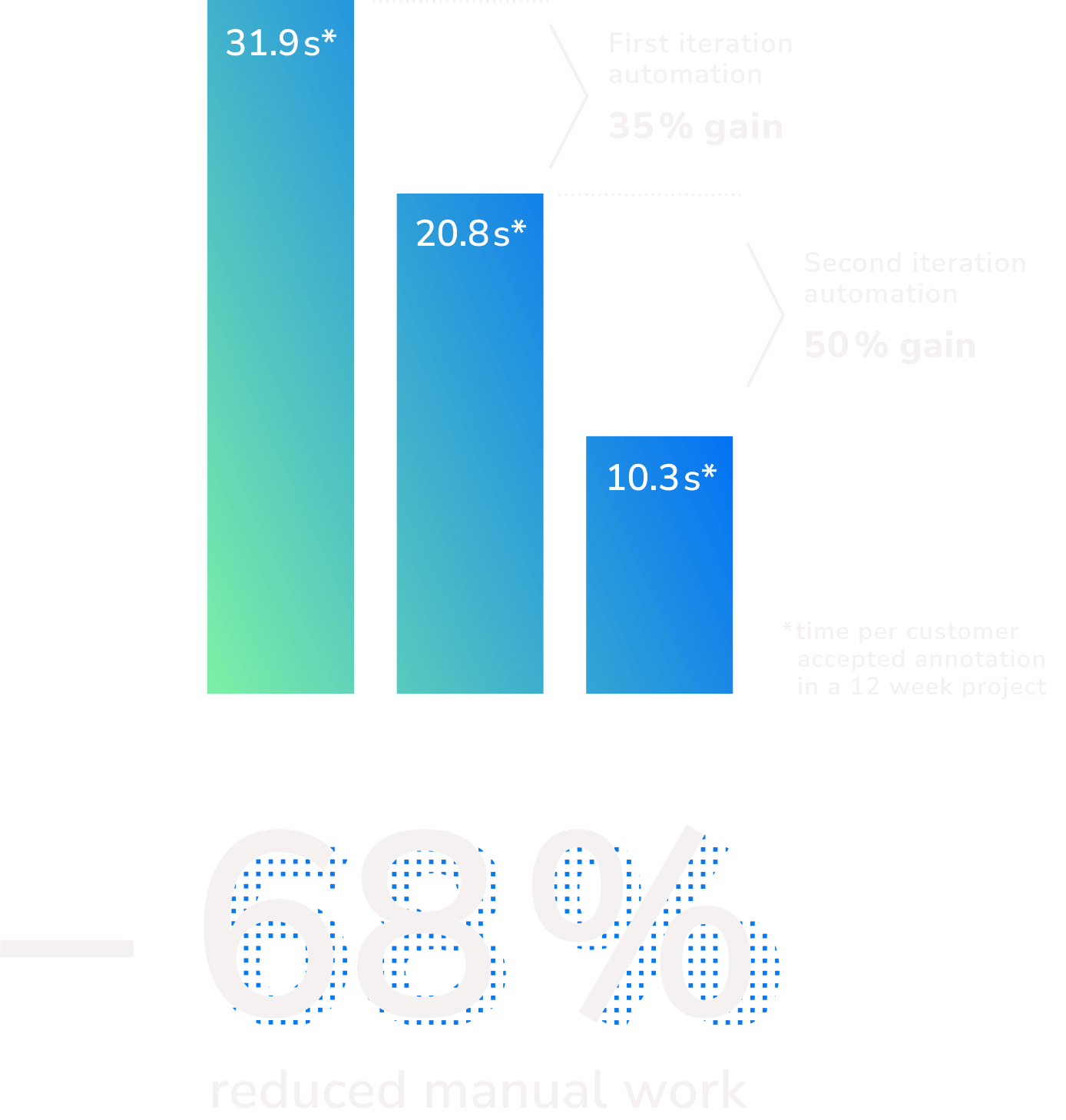

Significant automation improvements can be achieved after only 2 training iterations!

Explore the following reference case to gain deeper insight into our successful collaboration with one of our customers.

In this reference case, labeling automation enabled us to deliver 23 million objects annotated as 2D bounding boxes. The annotations exceeded the set quality targets and were delivered within critical time constraints.